We released the Goals Module in our application suite less than four weeks ago, and in that time our users have created almost 460 goals. This is great news: it means that the few months we spent working on the first release were time well spent.

The first release of Goals was a true Minimum Viable Product, and today we would like to share some practices for building good MVPs that we learned during its development.

Use mockup activities to test your assumptions

Allowing users to define goals based on the results of their SWOT analyses was one of the features we had in mind. However, there is one specific problem with your own ideas: each and every one of them seems like the best one! We needed to validate whether this particular feature would be useful not only to us, but also to our current and future users.

We defined a simple hypothesis:

Users of the SWOT analysis module want to create objectives based on the recommended strategies and the results of their analysis.

One of the most common ways of validating such assumptions is to interview customers directly. This method is great, but it is also time-consuming and often hard to carry out when your target audience is not yet precisely defined. That is why I advocate another method: giving users the option to perform the action in the application right away.

Instead of asking, we give users the option to perform a specific action and then count how many times they try to perform it. On the SWOT analysis summary page we created a clear call to action that let users create a list of goals based on their SWOT analysis results.

We could have waited to show this option until the Goals Module was actually finished and the action could really be performed. But what if no one clicked the button after the release? We would have had an unwanted feature in our application and wasted our development time.

Instead, we published a mockup version of the button while the new module was still in development. The mockup button didn’t create any goals; it showed the user a popup explaining that we were working on this feature, along with the option to leave an email address for anyone interested in it.

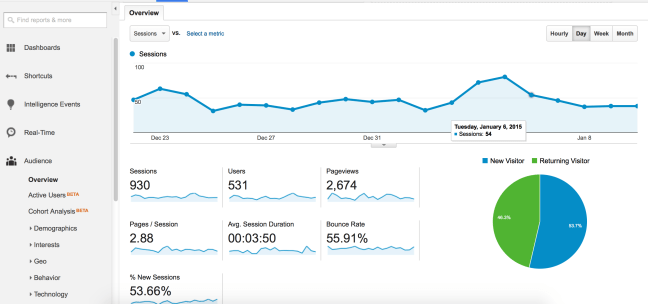

We started collecting users’ email addresses and, using Google Analytics, counting the number of unique clicks on the button. This gave us two pieces of information: how many users would use the feature, and how many were interested enough to give us their email address and ask to be contacted as soon as the module was released.
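To make the mechanics of the mockup button concrete, here is a minimal Python sketch. It is not our production code and does not use the Google Analytics API; the `FakeDoorExperiment` class and its methods are hypothetical, an in-memory stand-in that records unique clicks and email signups and derives the share of clickers who signed up.

```python
from dataclasses import dataclass, field


@dataclass
class FakeDoorExperiment:
    """Hypothetical in-memory tracker for a mockup button.

    It stands in for an analytics service: it records which users clicked
    the mockup button and which of them left an email address in the popup.
    """

    name: str
    clicked_users: set[str] = field(default_factory=set)
    signups: dict[str, str] = field(default_factory=dict)  # user_id -> email

    def click(self, user_id: str) -> str:
        # Record the click; the set keeps each user only once (unique clicks).
        self.clicked_users.add(user_id)
        # The mockup does not perform the real action; it only explains that
        # the feature is in progress and invites the user to sign up.
        return (f"We are currently working on {self.name}. "
                "Leave your email address to be notified when it is ready.")

    def sign_up(self, user_id: str, email: str) -> None:
        # Only users who clicked the button ever see the signup popup.
        if user_id not in self.clicked_users:
            raise ValueError("user must click the button before signing up")
        self.signups[user_id] = email

    @property
    def unique_clicks(self) -> int:
        return len(self.clicked_users)

    def signup_rate(self) -> float:
        # Share of unique clickers interested enough to leave an email.
        if not self.clicked_users:
            return 0.0
        return len(self.signups) / len(self.clicked_users)


if __name__ == "__main__":
    exp = FakeDoorExperiment("Goals Module")
    exp.click("u1")
    exp.click("u1")  # repeated click by the same user is not counted twice
    exp.click("u2")
    exp.sign_up("u2", "someone@example.com")
    print(exp.unique_clicks, exp.signup_rate())  # 2 0.5
```

Counting users in a set rather than counting raw clicks reflects the focus on unique clicks; in a real setup, that deduplication would be handled by the analytics tool.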

During the experiment, we recorded almost 350 clicks on the mockup button, and more than 60 users signed up for the Goals Module. Because we were doing very little advertising at the time, we took these numbers as a very good sign that we had chosen the right direction.

Interpretation of collected data is a real challenge

As with any statistics, you must be careful. If 100% of your users are interested in a particular new feature of your product, that probably indicates a bug in your analytics module. If 60% are interested, that is a good sign, but it doesn’t prove anything. On the other hand, if only 2% of your users want a specific feature, it may not be a must-have.

Of course, the hardest part is deciding exactly what percentage is sufficient: is 40% enough, or do we need at least 60%? It always annoyed me that I had read so many startup books and articles and still couldn’t find a single, simple answer to these questions: how much user interest is enough, and what conversion rate is enough?

Now I realize that I couldn’t find it because there is no single, simple answer. Or rather, there is one: it depends. The answer is shaped by various factors, for example:

- which stage of product development are we at?

- do we have any time pressure?

- what are our costs, and how much money do we have?

- what is the average conversion rate for a given marketing channel or market?

- … and so on.

In our case, we decided that the numbers were promising, but you have to remember that every case is different.
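One way to keep this judgment explicit is to write the cut-off down as a parameter instead of treating it as a universal constant. The sketch below is a hypothetical helper, not a method from any startup book: `interpret_interest` and the threshold values are assumptions, and the 100% check encodes the warning above that full interest probably means an analytics bug.

```python
def interpret_interest(interested: int, total: int, threshold: float) -> str:
    """Classify the share of users interested in a feature.

    `threshold` is a hypothetical, team-chosen cut-off. There is no universal
    value: it depends on the product stage, time pressure, budget and the
    typical conversion rate of the marketing channel or market.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= interested <= total:
        raise ValueError("interested must be between 0 and total")
    share = interested / total
    if share == 1.0:
        # 100% interest is more likely a tracking bug than real demand.
        return "suspicious: check the analytics setup"
    if share >= threshold:
        return "promising"
    return "weak: maybe not a must-have"


if __name__ == "__main__":
    print(interpret_interest(60, 100, threshold=0.4))  # promising
    print(interpret_interest(2, 100, threshold=0.4))   # weak: maybe not a must-have
```

The threshold is passed in on every call on purpose: a team under more time pressure, or facing a more expensive build, might raise it, while a team at an earlier stage might accept a lower one.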

Take advantage of your uniqueness

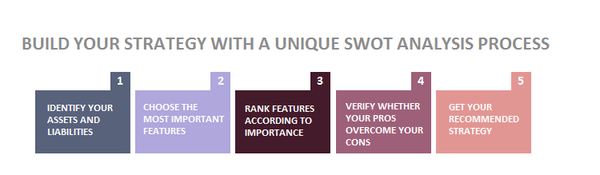

One of the features that made CayenneApps SWOT stand out from the competition was what we called process-driven design. Most existing SWOT solutions let users create a simple matrix of assets and liabilities but left them on their own during the creation process.

With our solution, we believed (and still believe!) that our users deserved more attention. That is why we created a unique wizard that guided the user from listing SWOT features, through selecting, evaluating and connecting them, to the final step where the user received a strategy recommendation.

Even though the next module, Goals, was different in many respects, we wanted to keep this uniqueness across all of our modules. We started with a very simple thought: if you are about to create a new feature, make sure that it still preserves the specific thing that makes your product unique. I personally think that this mindset helps us keep our work consistent.

For example, imagine a web application that is known for being lightning fast. In one of the subsequent releases, new features are about to be added. We want to deliver and validate them as quickly as we can, so we develop the new release as a Minimum Viable Product. But because of a number of simplifications, the new version becomes horribly slow. It still delivers value and its impact can be measured, but the unique feature of our product is lost. This means that the feedback we gather from users can be seriously biased: our users expect excellent performance, so even though they might like the new feature, they don’t use it, and our analytical results are skewed.

Of course, this does not mean that uniqueness should be the only criterion we have in mind when we prioritize features for the next release. Adding new (or removing old) features gives us flexibility and allows us to open our product to new markets. Additionally, every part of a product that goes beyond a single feature has its own specifics and sometimes needs a different design mindset.

How we reconciled uniqueness with a minimum product in our tool

During the development of the Goals Module, we had a few discussions about how to shape the final layout. On the one hand, the easiest option would have been a simple list of the user’s goals; on the other hand, we wanted the module to be part of a continuous improvement process. We wanted to give power users the freedom to play with the application, but at the same time we wanted to preserve the process-driven design that let users follow the simple step-by-step process they were already familiar with from the SWOT analysis module.

Eventually, we decided on a mixed approach. We wanted to help our users focus on one thing at a time, so we introduced an easy-to-use wizard that helped them create and prioritize their goals step by step. In the first step, users could only list their goals; the next step let them prioritize those goals, choose the most important ones, and mark them as urgent or long-term.

At the same time, we didn’t restrict our users by forcing them to fill in all the goal attributes at the very beginning. I remember how annoyed I was when one of the goal-tracking applications on the market forced me to fill in eleven required (!) fields before I could submit the goal creation form. That is why, in CayenneApps Goals, each goal has its own status: users can see at a glance which attributes of a goal are missing and fill them in whenever they want.
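The idea of a goal that can be saved with only a title and completed later can be modeled with optional fields. This Python sketch is hypothetical: the `Goal` class and its attributes are assumptions loosely based on the wizard steps described above (priority, urgent or long-term), and `deadline` is an invented extra field, not necessarily one of the real attributes in CayenneApps Goals.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class Goal:
    """Hypothetical goal record: only the title is required up front."""

    title: str
    priority: Optional[int] = None  # filled in during the prioritization step
    horizon: Optional[str] = None   # "urgent" or "long-term"
    deadline: Optional[str] = None  # hypothetical extra attribute

    def missing_attributes(self) -> list[str]:
        # Every optional attribute that is still None counts as missing.
        return [f.name for f in fields(self)
                if f.name != "title" and getattr(self, f.name) is None]

    @property
    def status(self) -> str:
        # The status lets the UI show at a glance what still needs filling in.
        return "complete" if not self.missing_attributes() else "incomplete"


if __name__ == "__main__":
    goal = Goal("Enter a new market")
    print(goal.status, goal.missing_attributes())
    # incomplete ['priority', 'horizon', 'deadline']
    goal.priority = 1
    goal.horizon = "urgent"
    print(goal.missing_attributes())  # ['deadline']
```

Deriving the status from the missing attributes, rather than storing it separately, keeps the two from drifting apart as the user fills in fields at their own pace.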

The point is that the approach we chose was not the smallest or easiest one. It required more development, but with this solution we were able to deliver the most value to our customers while staying consistent with our unique approach.

Use a measure-first design

Choosing one solution over another is definitely challenging, but very often it is only the first step. The next step is to actually understand how users use our application. This is what I call measure-first design. By designing an application with a measure-first approach, we make sure that the popularity or usability of each feature we build can be easily measured. Without metrics, we as product creators risk being blind to our users’ needs. Properly collected metrics give us a better understanding of how our application is used in real life.

When building a Minimum Viable Product, people tend to focus on the word “minimum”, believing that the product should have as few features as possible. But, as I wrote in the post about the different faces of MVP, one of the most important goals of a Minimum Viable Product is to help you learn. We can ship a minimal set of features in our next release, but if we don’t measure how they affect our users’ behavior, the whole point of creating an MVP is lost.

To make the user flow measurable, we use Google Analytics to record various user activities. In the Goals Module, we set up many different event handlers that record user actions anonymously. Google Analytics custom events show us which parts of the user interface are used more often than others and which referral traffic performs best. Of course, it is very hard to predict every potential user action and set up all the event handlers ahead of time. In such cases, you can use another tool, such as Crazy Egg, to track the areas that are not covered by custom events.
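As an illustration of measure-first design, the sketch below records anonymous events and counts them per interface area. It is a hypothetical in-memory stand-in, not the Google Analytics API: the `EventLog` class is an assumption, and its category/action/label fields only loosely mirror the shape of Google Analytics event tracking.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    # No user identifier is stored, so recorded events stay anonymous.
    category: str    # which part of the interface, e.g. "wizard"
    action: str      # what the user did, e.g. "next_step"
    label: str = ""  # optional extra detail


class EventLog:
    """Hypothetical in-memory stand-in for an analytics backend."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def track(self, category: str, action: str, label: str = "") -> None:
        self._events.append(Event(category, action, label))

    def most_used(self, n: int = 3) -> list[tuple[str, int]]:
        # Count events per interface area to see which parts are used most.
        counts = Counter(e.category for e in self._events)
        return counts.most_common(n)


if __name__ == "__main__":
    log = EventLog()
    log.track("wizard", "next_step")
    log.track("wizard", "next_step")
    log.track("goal_list", "edit_goal", "priority")
    print(log.most_used(2))  # [('wizard', 2), ('goal_list', 1)]
```

The design choice that matters here is deciding on the events before the feature ships, so that the question "is this part of the interface used?" can be answered from day one.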

Of course, statistics (especially those based on a small number of users) cannot give the whole picture. Google Analytics custom events will count the total number of clicks, but they won’t tell you whether a user spent too long searching for a particular action. As you can learn from Steve Krug’s famous book “Don’t Make Me Think”, direct interaction with users is essential, and we cannot overlook its importance. Nevertheless, in my opinion, placing one button on our website and counting the actual number of clicks can sometimes tell us more about our assumptions than a dozen interviews with potential customers.

Summary

Developing a good product is hard, but developing a good MVP is even harder.

It is a constant tug of war between different priorities and ideas, but some techniques make the MVP development process a bit easier. In my experience, Story Mapping is one of the best techniques for defining an MVP’s scope. Mockup actions can be very helpful in testing your users’ behavioral patterns. Remembering our uniqueness allowed us to focus on the most important issues, and the measure-first approach helped us ensure that our MVP was testable.

All these methods have helped us build our Goals Module, which we are very proud of. But I am very eager to learn more methods that help in building and testing an MVP. Do you know of, or have you used, any? Please share them in the comments!
